Connection Multiplexing: HTTP/2 Stream Management for TTFB

Connection multiplexing changed how web protocols move data, most visibly in HTTP/2. It lets multiple data streams share a single connection, which can improve page load speed and reduce latency. This article looks at how HTTP/2 manages those streams and what that means for Time to First Byte (TTFB).

Understanding Connection Multiplexing and Its Role in HTTP/2 Performance

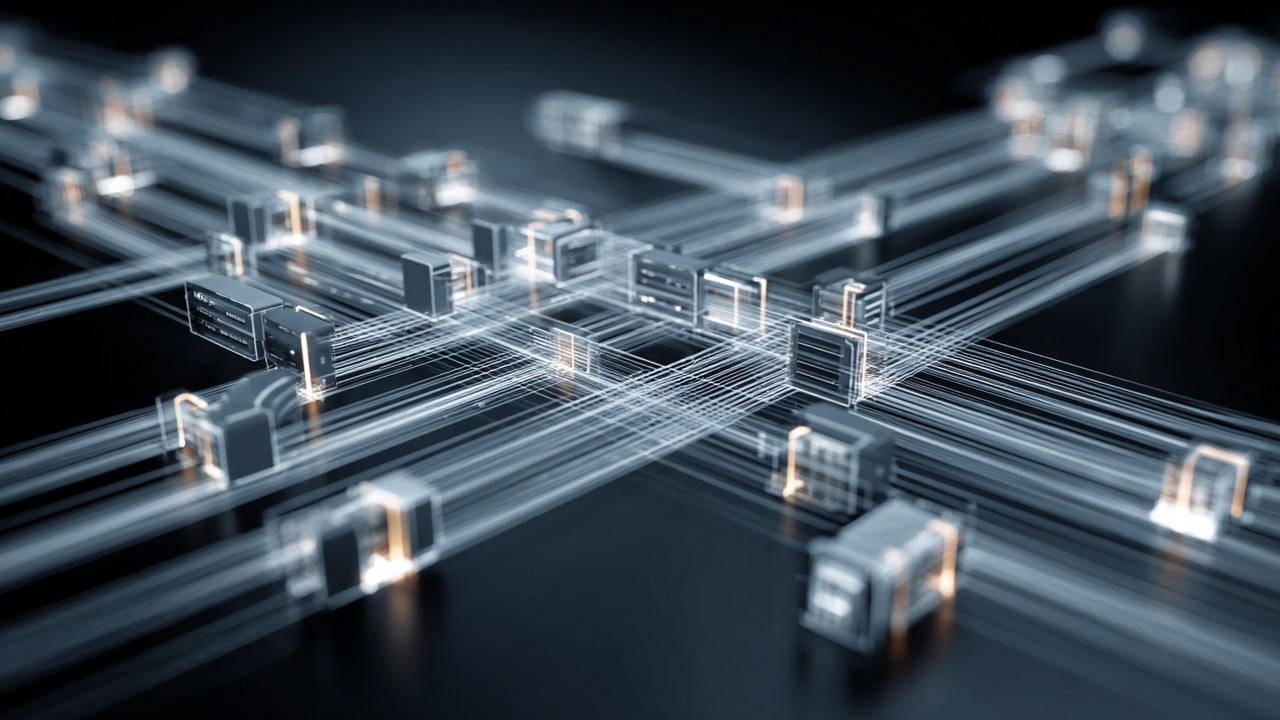

Connection multiplexing refers to the technique of sending multiple independent streams of data simultaneously over a single network connection. In the context of HTTP/2, this approach fundamentally changes how web clients and servers communicate compared to the older HTTP/1.1 protocol. Whereas HTTP/1.1 typically opens multiple TCP connections to handle parallel requests, HTTP/2 employs a single TCP connection over which multiple streams are multiplexed.

This shift is significant because HTTP/2 introduces the concept of streams: logical, independent channels within the same physical connection. Each stream has its own identifier and carries a sequence of frames that represent one HTTP request and its response. Because frames from different streams can be interleaved on the wire, browsers and servers can exchange many requests and responses concurrently without the overhead of establishing a new connection for each.
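To make the idea of frames tagged with a stream identifier concrete, here is a minimal Python sketch that decodes the fixed 9-byte header that precedes every HTTP/2 frame. The names `FrameHeader` and `parse_frame_header` are illustrative and do not come from any library, and only a subset of frame types is listed.

```python
import struct
from typing import NamedTuple

# Subset of the frame type codes defined by HTTP/2.
FRAME_TYPES = {
    0x0: "DATA",
    0x1: "HEADERS",
    0x3: "RST_STREAM",
    0x4: "SETTINGS",
    0x8: "WINDOW_UPDATE",
}


class FrameHeader(NamedTuple):
    length: int      # payload length in bytes (24-bit field)
    type_name: str   # human-readable frame type
    flags: int       # 8-bit flags field
    stream_id: int   # 31-bit stream identifier (0 = the connection itself)


def parse_frame_header(raw: bytes) -> FrameHeader:
    """Decode the 9-byte header at the start of an HTTP/2 frame."""
    if len(raw) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    # Layout: 24-bit length, 8-bit type, 8-bit flags, 1 reserved bit + 31-bit stream id.
    length_hi, length_lo, frame_type, flags, stream = struct.unpack("!BHBBI", raw[:9])
    return FrameHeader(
        length=(length_hi << 16) | length_lo,
        type_name=FRAME_TYPES.get(frame_type, f"UNKNOWN(0x{frame_type:x})"),
        flags=flags,
        stream_id=stream & 0x7FFFFFFF,  # drop the reserved high bit
    )
```

A receiver reads the stream identifier from each header and hands the payload to the matching stream, which is what allows frames belonging to different requests to be interleaved on one connection.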

The benefits of HTTP/2 multiplexing are profound. By consolidating multiple data exchanges into one connection, multiplexed connections reduce the latency caused by TCP connection setup and teardown. This reduction in overhead directly translates to faster page load times and improved responsiveness. Additionally, multiplexing avoids the limitations of HTTP/1.1’s serialized request handling, enabling more efficient use of available bandwidth.
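As rough arithmetic for the setup cost described above, the following Python sketch estimates how long a brand-new HTTPS connection waits before it can send its first request byte. It assumes one round trip for the TCP handshake plus one round trip for a full TLS 1.3 handshake (two for TLS 1.2), and it ignores DNS lookups and session resumption. The function name and the 80 ms round-trip time are illustrative assumptions.

```python
def setup_delay_ms(rtt_ms: float, tls_round_trips: int = 1) -> float:
    """Estimated delay before the first request byte can leave on a new HTTPS connection."""
    tcp_round_trips = 1  # SYN / SYN-ACK exchange
    return rtt_ms * (tcp_round_trips + tls_round_trips)


# Assumed round-trip time of 80 ms between client and server.
tls13_delay = setup_delay_ms(80)       # full TLS 1.3 handshake
tls12_delay = setup_delay_ms(80, 2)    # full TLS 1.2 handshake
```

Every additional connection an HTTP/1.1 client opens pays this delay again, whereas requests multiplexed onto an existing HTTP/2 connection do not.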

An important caveat concerns network congestion and packet loss. Because all streams share one TCP connection, they also share one congestion window, and a lost packet delays data on every stream until it is retransmitted. HTTP/2's flow control and prioritization mechanisms help decide which data is sent first when conditions fluctuate, but they cannot remove this transport-level dependency.

In practical terms, this means that modern websites leveraging HTTP/2’s multiplexed connections can deliver richer content more swiftly, enhancing user experience and satisfaction. The ability to manage multiple streams over a single connection also simplifies server resource management and reduces the likelihood of connection-related bottlenecks.

Overall, connection multiplexing embodies a core advancement in web protocol design. Its integration into HTTP/2 not only redefines stream handling but also sets a new baseline for how performance optimization is approached in web development. By enabling multiple simultaneous streams within a single TCP connection, HTTP/2 multiplexing plays a pivotal role in reducing latency, boosting page load speed, and driving the evolution of faster, more efficient web experiences.

How HTTP/2 Stream Management Influences Time to First Byte (TTFB)

Time to First Byte (TTFB) is a web performance metric that measures the time between a client sending a request and the arrival of the first byte of the server's response. It matters for page load speed, user experience, and SEO. Lower TTFB values typically indicate a more responsive server and network path, and page speed is among the signals that search engines take into account.

HTTP/2 stream management and TTFB are closely linked. With multiplexing, HTTP/2 handles multiple requests concurrently over a single connection, minimizing the delays that traditionally inflate TTFB in HTTP/1.1. In the earlier protocol, each connection carries one request at a time, so a browser has to wait for a response to complete before reusing that connection for the next request. This application-level head-of-line (HOL) blocking delays the first byte of every resource waiting in the queue.

HTTP/2 addresses this issue by allowing multiple streams to coexist and be processed independently. This multiplexed connection model significantly reduces the waiting time for the first byte of subsequent resources. For example, if a webpage requests CSS, JavaScript, and images simultaneously, HTTP/2 can send these requests in parallel streams without waiting for one to finish before starting the next.
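The difference can be sketched with a toy timing model in Python. It compares first-byte times on a single HTTP/1.1 connection without pipelining against a single HTTP/2 connection where all requests leave at once. The model is deliberately simplified: it ignores bandwidth sharing between streams, connection setup, and packet loss, and the `Resource` class, function names, and timings are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Resource:
    name: str
    server_ms: float    # time the server needs before it can emit the first byte
    transfer_ms: float  # time to transfer the body once it has started


def serial_first_byte(resources: List[Resource], rtt_ms: float) -> Dict[str, float]:
    """One HTTP/1.1 connection: each request waits for the previous response to finish."""
    clock = 0.0
    first_byte = {}
    for r in resources:
        first_byte[r.name] = clock + rtt_ms + r.server_ms
        clock = first_byte[r.name] + r.transfer_ms
    return first_byte


def multiplexed_first_byte(resources: List[Resource], rtt_ms: float) -> Dict[str, float]:
    """One HTTP/2 connection: every request is sent at t=0 on its own stream."""
    return {r.name: rtt_ms + r.server_ms for r in resources}


page = [
    Resource("style.css", server_ms=20, transfer_ms=30),
    Resource("app.js", server_ms=40, transfer_ms=50),
    Resource("hero.jpg", server_ms=10, transfer_ms=100),
]
serial = serial_first_byte(page, rtt_ms=50)
multiplexed = multiplexed_first_byte(page, rtt_ms=50)
```

In this model the image's first byte arrives after 300 ms on the serial connection but after 60 ms when multiplexed, because it no longer waits behind the CSS and JavaScript responses. Real browsers soften the HTTP/1.1 case by opening several connections in parallel, so measured gains are usually smaller.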

Mechanisms such as stream prioritization and flow control further enhance the efficiency of this process. HTTP/2 stream management assigns priority levels to different streams, ensuring critical resources like HTML and CSS are delivered before less critical assets such as images or fonts. This prioritization directly impacts the TTFB by expediting the delivery of resources that affect the initial rendering of the webpage.

A key technical difference influencing TTFB is where head-of-line (HOL) blocking occurs. In HTTP/1.1, a slow response blocks every request queued behind it on the same connection. HTTP/2 removes this application-level blocking: frames from different streams are interleaved, so a slow response on one stream does not hold back the others. Blocking at the TCP layer remains, however. If a packet is lost, TCP withholds all later data on the connection until the retransmission arrives, which stalls every stream at once.

Looking at real-world examples, websites that have transitioned to HTTP/2 often report improvements in TTFB. Reported reductions reach up to 30-40% in some case studies, although results depend heavily on server configuration, page composition, and network conditions. Where such gains materialize, they translate into noticeably faster page loads and improved user engagement metrics.

In summary, HTTP/2’s advanced stream management optimizes TTFB by simultaneously handling multiple requests, prioritizing critical data, and overcoming the limitations of HTTP/1.1. This optimization not only enhances HTTP/2 performance but also contributes to better SEO outcomes by delivering faster, more responsive websites that satisfy both users and search engines.

Technical Deep Dive into HTTP/2 Stream Prioritization and Flow Control

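A fundamental aspect of efficient HTTP/2 stream prioritization lies in its ability to influence the order in which resources are loaded. In the original HTTP/2 specification (RFC 7540), each stream can be assigned a weight from 1 to 256 and a dependency on another stream, allowing the client to signal resource importance to the server. These signals are advisory, and the server decides how to act on them. The current specification (RFC 9113) deprecates this scheme, and RFC 9218 defines a simpler replacement based on urgency levels. Either way, the goal is that vital components, such as the main HTML document or critical CSS, are transmitted before less urgent assets.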

Flow control in HTTP/2 complements prioritization by limiting how much data can be in flight on each stream, and on the connection as a whole, at any given time. Its main purpose is to keep a sender from overwhelming a receiver; as a side effect, a single slow-to-read stream cannot tie up the entire connection. Flow control is credit-based and applies to DATA frames: each window starts at 65,535 bytes by default, shrinks as data is sent, and is replenished when the receiver sends WINDOW_UPDATE frames.
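The credit-based accounting can be sketched in a few lines of Python. This is a simplified model rather than a protocol implementation: it tracks one stream window and one connection window from the sender's side and leaves out details such as window changes triggered by SETTINGS frames. `SendWindow` and `send_data` are hypothetical names chosen for illustration.

```python
DEFAULT_WINDOW = 65_535  # default initial flow-control window in HTTP/2, in bytes


class SendWindow:
    """Sender-side view of a flow-control window (per stream or per connection)."""

    def __init__(self, size: int = DEFAULT_WINDOW) -> None:
        self.available = size

    def consume(self, n: int) -> None:
        if n > self.available:
            raise ValueError("cannot send more than the window allows")
        self.available -= n

    def window_update(self, increment: int) -> None:
        """Apply the credit carried by a WINDOW_UPDATE frame from the receiver."""
        if increment <= 0:
            raise ValueError("WINDOW_UPDATE increment must be positive")
        self.available += increment


def send_data(stream: SendWindow, connection: SendWindow, wanted: int) -> int:
    """Send as many DATA bytes as both windows permit; return the number sent."""
    n = max(0, min(wanted, stream.available, connection.available))
    stream.consume(n)
    connection.consume(n)
    return n
```

A sender that wants to transmit 100,000 bytes on a fresh connection can send only 65,535 and must then wait. Because both windows apply, credit granted to the stream alone is not enough: the connection-level window has to be replenished as well before more data can flow.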

Together, prioritization and flow control create a balance that maximizes throughput while minimizing latency. For instance, if a high-priority stream requests the main HTML page, the server can allocate more bandwidth to this stream, accelerating its delivery and improving the overall user experience.

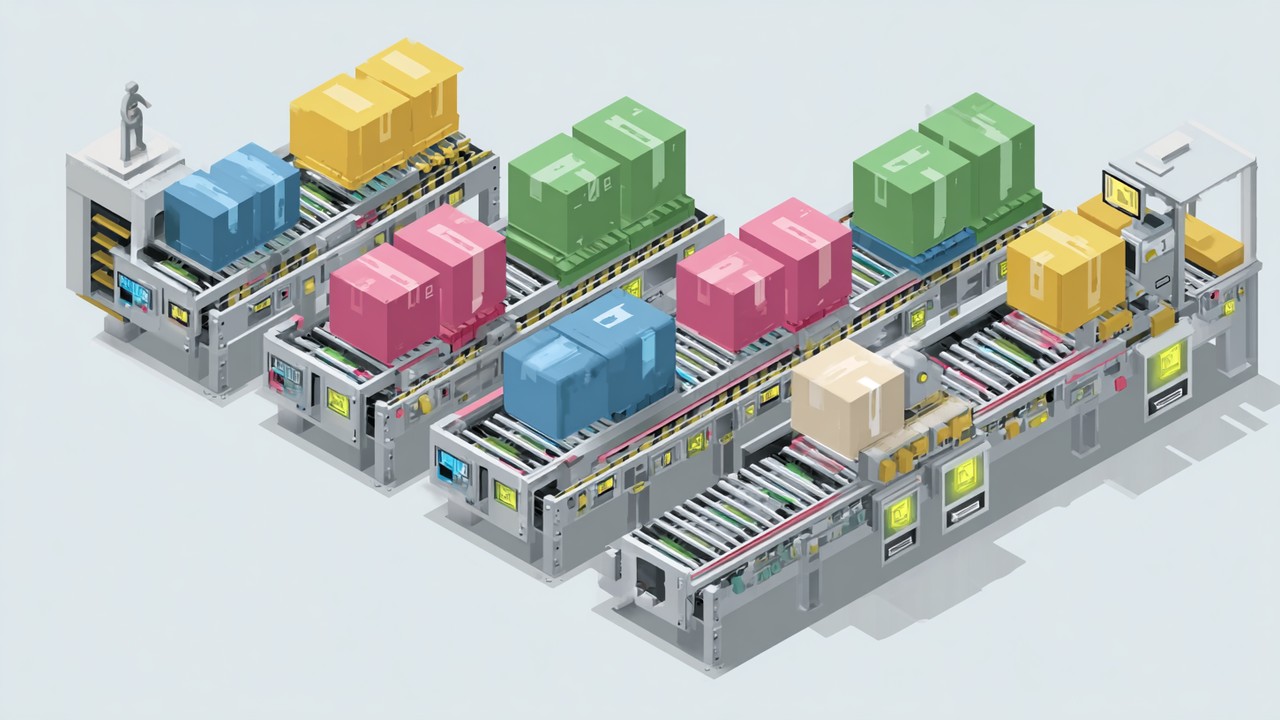

To illustrate, consider a simplified scenario: a browser requests three resources—HTML (high priority), CSS (medium priority), and images (low priority). HTTP/2’s prioritization ensures that the HTML stream is served first, followed by CSS, while images load last. Flow control regulates these streams so no single stream stalls others, maintaining smooth data flow.
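One way to picture weights is proportional sharing among sibling streams, in the style of the original HTTP/2 priority scheme. The Python sketch below splits a fixed amount of sending capacity in proportion to each stream's weight. It is an illustration under assumed values, not how any particular server schedules frames; `share_capacity`, the weights, and the capacity figure are all hypothetical. Strict ordering such as "HTML first, then CSS" would be expressed through dependencies rather than weights and is not modeled here.

```python
from typing import Dict


def share_capacity(weights: Dict[str, int], capacity_bytes: int) -> Dict[str, int]:
    """Split capacity among sibling streams in proportion to their weights (1 to 256)."""
    for name, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"weight for {name} must be between 1 and 256")
    total = sum(weights.values())
    # Integer division keeps the result in whole bytes; any remainder is left unassigned.
    return {name: capacity_bytes * weight // total for name, weight in weights.items()}


# Assumed weights for the three resources in the scenario above.
allocation = share_capacity({"html": 256, "css": 128, "images": 64}, capacity_bytes=44_800)
```

With these assumed weights the HTML stream receives 25,600 bytes of the next 44,800, the CSS stream 12,800, and the image stream 6,400.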

These mechanisms are critical in maintaining the efficiency of multiplexed streams. Without proper prioritization, multiplexing alone could lead to resource contention, where less important streams consume bandwidth at the expense of critical ones, negatively impacting TTFB and page load speed.

Visualizing this process, one can imagine a conveyor belt where prioritized packages are placed at the front, while flow control acts as a gatekeeper regulating how many packages pass through at a time. This orchestration maintains the steady and orderly delivery of resources, optimizing web performance.

In conclusion, HTTP/2’s combination of stream prioritization and flow control is essential for managing multiplexed connections effectively. This synergy ensures that critical resources are delivered promptly, enhancing TTFB and overall site responsiveness. Understanding and leveraging these technical features is vital for developers aiming to optimize their HTTP/2 implementations.

Best Practices for Leveraging HTTP/2 Multiplexing to Improve Web Performance

To fully harness the power of HTTP/2 multiplexing and enhance website speed and responsiveness, developers and site owners must adopt targeted strategies that optimize stream management. Effective HTTP/2 performance tuning involves both server and client-side configurations, careful resource prioritization, and continuous monitoring to reduce latency and improve TTFB.

Optimize Server and Client-Side Settings

On the server side, enabling HTTP/2 support is the foundational step. Most modern web servers like Apache, Nginx, and IIS support HTTP/2, but proper configuration is key to unlocking multiplexed connection benefits. For example:

- Enable HTTP/2 with TLS: Since HTTP/2 is predominantly deployed over HTTPS, ensuring robust TLS configurations with modern cipher suites enhances security without sacrificing speed.

- Configure stream concurrency limits: Adjust server settings to allow an optimal number of simultaneous streams per connection, balancing resource availability and load.

- Implement efficient prioritization policies: Servers can be tuned to respect client stream priorities, ensuring critical assets are delivered promptly.
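After enabling HTTP/2 over TLS, it can be useful to confirm what a server actually negotiates. Browsers select HTTP/2 through the TLS ALPN extension, and the Python sketch below performs the same negotiation using only the standard library. The function names are illustrative, and calling `negotiated_protocol` requires network access to a host you choose.

```python
import socket
import ssl
from typing import Optional


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Open a TLS connection offering h2 and http/1.1, and return the protocol the server picked."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()


def describe(protocol: Optional[str]) -> str:
    """Turn the ALPN result into a short human-readable summary."""
    if protocol == "h2":
        return "HTTP/2 negotiated"
    if protocol == "http/1.1":
        return "fell back to HTTP/1.1"
    return "no ALPN protocol selected; clients will use HTTP/1.x"
```

For example, `describe(negotiated_protocol("example.com"))` reports whether that host offers HTTP/2; the hostname here is only a placeholder.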

Client-side, minimizing unnecessary requests and bundling resources wisely complements multiplexing. Although HTTP/2 reduces the need for resource concatenation, every stream still competes for the same bandwidth and connection-level flow-control window, so a very large number of parallel requests can delay critical resources. Striking a balance is crucial.

Measure TTFB and Multiplexing Effectiveness

Tracking improvements requires reliable measurement tools focused on both TTFB and HTTP/2 multiplexing metrics. Popular web performance tools include:

- WebPageTest: Offers detailed waterfall charts illustrating how multiplexed streams interact and impact TTFB.

- Chrome DevTools: Provides real-time insights into network requests, stream prioritization, and timing breakdowns.

- Lighthouse: Assesses overall page performance, highlighting areas where HTTP/2 multiplexing benefits can be maximized.

Regularly analyzing these metrics helps identify bottlenecks caused by inefficient stream management or server misconfigurations.
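Tools such as WebPageTest and Chrome DevTools can export request timings as a HAR file, which makes it possible to compute TTFB per request in a script. The Python sketch below assumes the HAR 1.2 `timings` object, where a value of -1 means a phase did not apply and the `ssl` time is already included in `connect`. It measures TTFB from the moment the request was queued, which is one of several definitions in use; the `wait` phase alone corresponds to server response time. The function name and sample values are illustrative.

```python
from typing import Dict

# Phases that elapse before the first response byte arrives.
# "ssl" is omitted because HAR 1.2 already counts it inside "connect".
PHASES_BEFORE_FIRST_BYTE = ("blocked", "dns", "connect", "send", "wait")


def ttfb_ms(timings: Dict[str, float]) -> float:
    """Sum the pre-response phases of a HAR entry, treating -1 (not applicable) as zero."""
    return sum(max(0, timings.get(phase, -1)) for phase in PHASES_BEFORE_FIRST_BYTE)


# Illustrative values: the first request on a new connection...
new_connection = {"blocked": 2, "dns": 10, "connect": 60, "ssl": 35,
                  "send": 1, "wait": 120, "receive": 40}
# ...and a later request multiplexed onto the same connection.
reused_connection = {"blocked": 1, "dns": -1, "connect": -1, "ssl": -1,
                     "send": 0, "wait": 80, "receive": 5}
```

Comparing the two sample entries shows where multiplexing helps: the reused connection skips the DNS and connect phases entirely, so its TTFB is dominated by server wait time.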

Avoid Common Pitfalls in Multiplexing

While HTTP/2 multiplexing offers many advantages, improper implementation can lead to unintended consequences:

- Inefficient stream prioritization: Without correct prioritization, critical resources may be delayed, nullifying TTFB gains.

- Excessive parallel streams: Opening too many streams at once makes them compete for the same bandwidth and connection-level flow-control window, and requests beyond the server's concurrent-stream limit have to wait, which can increase latency.

- Ignoring legacy HTTP/1.1 clients: Some users may still connect over HTTP/1.1, so fallback mechanisms and optimizations for both protocols are necessary.

Being mindful of these pitfalls ensures smoother transitions to HTTP/2 and sustained performance improvements.

Integrate Multiplexing with Other Optimization Techniques

HTTP/2 multiplexing works best when combined with complementary performance strategies:

- Caching: Leveraging browser and server-side caching reduces redundant requests, easing stream load.

- Content Delivery Networks (CDNs): Distributing content geographically shortens round-trip times, amplifying multiplexing benefits.

- Resource compression and minification: Smaller payloads speed up transmission, making multiplexed streams more efficient.

- Lazy loading: Deferring non-critical resources keeps them from competing with critical streams during the initial load, so the resources needed for first render arrive sooner.

Together, these tactics form a holistic approach to web performance, amplifying the advantages of HTTP/2’s multiplexed connections.

Final Recommendations

To optimize HTTP/2 multiplexing effectively, site owners should:

- Ensure HTTP/2 is enabled and properly configured on the server.

- Monitor TTFB and stream activity with specialized tools.

- Prioritize critical resources accurately to avoid delays.

- Manage the number of concurrent streams to prevent congestion.

- Combine multiplexing with caching, CDNs, and compression for maximal impact.

By following these best practices, websites can achieve substantial reductions in TTFB, delivering faster content and improved user experiences that positively influence SEO and engagement.

Evaluating the Impact of Connection Multiplexing on Real-World Website Speed and SEO

The adoption of HTTP/2 and its multiplexed connections can have a measurable effect on website speed and, indirectly, on SEO performance. Sites that move to HTTP/2 often see faster TTFB, and page speed is one of the signals associated with search engine rankings and user satisfaction.

Connection Multiplexing’s Influence on SEO Rankings

Search engines prioritize user experience signals such as page load speed and responsiveness. Since connection multiplexing reduces latency by allowing simultaneous data streams, it directly contributes to faster content delivery. This improvement in TTFB is especially important for mobile users or those on high-latency networks, where delays can significantly affect bounce rates and engagement.

Sites leveraging HTTP/2 multiplexing may rank better where their loading metrics improve as a result, although speed is only one of many ranking inputs. Faster TTFB also means search engine crawlers receive content more quickly, which allows more efficient crawling and indexing.

Enhanced User Experience and Engagement

Beyond SEO, the speed benefits of HTTP/2 multiplexing translate to tangible user experience improvements. Reduced waiting times encourage longer site visits, higher conversion rates, and lower abandonment. Studies show that even milliseconds shaved off TTFB can increase user retention, making multiplexing a valuable tool for business growth.

Comparative Scenarios: With and Without HTTP/2 Multiplexing

When comparing websites with HTTP/2 multiplexing enabled versus those relying on HTTP/1.1 or unoptimized HTTP/2, differences in speed and SEO become apparent:

- Without multiplexing: Multiple TCP connections create overhead, increasing TTFB and slowing resource delivery.

- With multiplexing: Single connections handle many streams efficiently, reducing latency and speeding up page rendering.

This contrast highlights the strategic advantage of adopting HTTP/2 for modern web infrastructure.

Strategic Recommendations for Businesses

For businesses aiming to improve SEO and website speed, transitioning to HTTP/2 with proper multiplexing support is a critical step. It requires investment in server upgrades, configuration tuning, and ongoing performance monitoring but yields significant returns in search rankings and user engagement.

Moreover, integrating multiplexing with other optimization strategies—such as caching, CDNs, and compression—maximizes performance gains.

Key Takeaways on Connection Multiplexing’s Strategic Value

- Connection multiplexing is a foundational element of HTTP/2 that accelerates data transmission by handling multiple streams concurrently.

- This technology significantly reduces TTFB, a vital metric for both SEO and user experience.

- Proper stream management, prioritization, and flow control are essential to fully realize multiplexing benefits.

- Empirical evidence supports the SEO and performance advantages of HTTP/2 multiplexing adoption.

- Businesses enhancing their web infrastructure with HTTP/2 multiplexing position themselves for improved search rankings, faster load times, and better user engagement.

Embracing connection multiplexing as part of a comprehensive web performance strategy is indispensable for organizations seeking competitive advantage in today’s digital landscape.